There are all kinds of ways to play the Docker game and, obviously, no one of them is going to be right for every use case. So what I’m going to do here is give you a brief functional overview of each of the most obvious management options in a way that can help you choose for yourself and save you a whole lot of time and frustration in the process. That way you get to look smart and no one has to know it was me all along.

First though, here’s the sentence with which every article on any subject even remotely related to Docker must begin: Over the past n years (where n < 6), container technologies, and Docker in particular, have become dominant tools in the application provision world.

Great. With that out of the way, we can get down to business. So you’re considering delivering your app or networked service through Docker containers…or at least giving them a good look. I certainly won’t argue with you: that’s probably a good choice.

Now presumably you already know that Docker Engine is the open source software environment that lets you virtualize bits and pieces of a host hardware system until they look and act just like real servers. Docker is now available in either its free (Community Edition) or commercially supported (Enterprise Edition) versions.

No doubt you also know that invoking Docker Engine from your command line using things like:

docker ps

docker images

and:

docker network inspect

…will get stuff done. Not so comfortable with all that? There’s some intro-level material you might like that’s included in my Docker-oriented courses over at Pluralsight.

All that will work just fine while you’re just learning your way around. But once you’re ready to start planning a robust and highly scalable deployment – complete with complex configurations that might include microservices and network bridges – then the landscape quickly changes. The question is not so much “how”, but “where and which”: Do you have the compute and network resources to run your app locally, or will you need to find a host? Should you do it yourself or choose a managed service on a public cloud like AWS’s Elastic Beanstalk?

And then what about administration? Are you a hands-on type or do you prefer standing a layer or two back and letting management tools like Kubernetes or Docker swarm mode do some of the heavy lifting for you? Or how about two or three layers back and going with Ansible or Puppet?

Let’s divide things into three categories: repository tools for storing and managing Docker images, administration frameworks for defining, launching, and managing Docker containers through their life cycles, and then some command line and configuration automation management tools.

1. Image Registries

Docker Hub

For most people, the obvious first place to look for Docker images – the packages containing the operating systems and software used to run containers – is Docker Hub. Provided by Docker itself, Docker Hub holds a vast collection of images that come pre-loaded to support all kinds of application projects. You can find and research images on the hub.docker.com web site, and then pull them directly into your own Docker Engine environment.

docker pull ubuntu

Once you begin creating your own images, you can safely store as many of them as you like in public repositories on Docker Hub. In addition, they’ll allow you one private repo for free, and more at a rate of roughly one dollar per repo. Perhaps the nicest thing about Docker Hub is the way it works seamlessly with just about anything else connected to Docker, including public cloud providers like AWS and infrastructure management services like Docker Cloud.

The separate Docker Store service allows you to publish pre-certified images and plugins to satisfy demand for access to trusted resources.

EC2 Container Registry (ECR)

Amazon’s AWS knows all about the power and potential of Docker and wants in on the game. As part of their efforts to open up their cloud ecosystem to as much Docker business as possible, they’ve built their own registry to go with their EC2 Container Service platform: ECR. Images can be pushed, pulled, and managed through the AWS GUI or CLI tool. Permissions policies can restrict image access to only the people you select.

The limitation? ECR is obviously designed to work best with infrastructure running on AWS-based services like ECS and Elastic Beanstalk.
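As a rough sketch of the CLI route, pushing an image to ECR might look something like the helper below. The account ID, region, and function names are placeholders of my own, and the `aws ecr get-login-password` step assumes a reasonably recent AWS CLI. Nothing touches AWS or Docker until you call `push_to_ecr` yourself.

```shell
#!/bin/sh
# Build the registry host name ECR assigns to an account and region.
ecr_host() {
    # $1 = AWS account ID, $2 = region
    printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# Tag a local image and push it to an ECR repository (hypothetical helper).
push_to_ecr() {
    # $1 = account ID, $2 = region, $3 = repository name, $4 = local image
    host=$(ecr_host "$1" "$2")
    # Authenticate the local Docker client against ECR
    aws ecr get-login-password --region "$2" |
        docker login --username AWS --password-stdin "$host" || return 1
    # Try to create the repository; errors (such as "already exists") are ignored
    aws ecr create-repository --repository-name "$3" --region "$2" >/dev/null 2>&1
    docker tag "$4" "$host/$3:latest" && docker push "$host/$3:latest"
}

# Placeholder account ID and region, for illustration only
ecr_host 123456789012 us-east-1
```

The only part that is specific to ECR is the host name: once the image is tagged with it, the push itself is an ordinary `docker push`.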

Docker Registry

If you need to maintain your images a bit closer to home – either for security or practical reasons – then you’ll want to know about Docker’s freely available Docker Registry. You designate a registry server with access to and from your other network assets, install and then enable the docker-registry package, tag images so they’re pointed to your local registry, and you’ve got yourself a real, live private repo.

dpkg -i docker-registry_2.4.1~ds1-2_amd64.deb

systemctl enable docker-registry

docker tag hello-world localhost:5000/hello-world:latest

The images themselves are stored deep within the file system on your server, but they’re available through the same CLI tools as those on Docker Hub. Worried about securing your images? Docker Registry lets you apply SSL/TLS certificates and control access by enforcing login authentication to your site.
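To round out the tagging step, here is a minimal sketch of how the push might go once the registry is running. The function names are my own invention, and I’m assuming a registry listening on plain HTTP at localhost:5000; a TLS-secured registry would need https in the catalog URL.

```shell
#!/bin/sh
# Prefix an image name with the registry address so Docker knows where to push it.
registry_ref() {
    # $1 = registry address, $2 = image name, $3 = tag (defaults to "latest")
    printf '%s/%s:%s\n' "$1" "$2" "${3:-latest}"
}

# Tag and push an image, then list what the registry holds (hypothetical helper).
publish_local() {
    # $1 = registry address, $2 = local image name, $3 = optional tag
    ref=$(registry_ref "$1" "$2" "$3")
    docker tag "$2" "$ref" || return 1
    docker push "$ref" || return 1
    # The registry's HTTP API lists its repositories at /v2/_catalog
    curl -s "http://$1/v2/_catalog"
}

registry_ref localhost:5000 hello-world
```

Calling `publish_local localhost:5000 hello-world` on the registry server would tag, push, and then print the catalog, which is a quick way to confirm the image actually arrived.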

The Docker Trusted Registry is Docker’s commercial version of the Docker Registry. In exchange for monthly or annual charges, you get extra bells and whistles including support, a browser-based GUI, and LDAP/AD integration.

2. Administration Frameworks

Even once you’ve graduated beyond the just-seeing-how-stuff-works stage, you might still want to keep an active Docker deployment on-premises: Perhaps your clients are all local or your projected workload isn’t all that heavy. Or perhaps you’re just paranoid about security. And by “paranoid about security” of course, I mean “well informed about the current state of network vulnerabilities”.

One way to “stay local” is to just continue with what you’ve been doing until now. As long as you take resource security and capacity considerations into account, there’s no reason to abandon the good old Community Edition Docker Engine you’ve already got installed.

However, if the level of complexity you think you’re going to face leaves you feeling a bit lost, then you might want to consider upgrading to a commercial environment that, along with ongoing support, can offer browser-based admin consoles. Either way though, you’re going to need to provide your own hosting environment where your containers will run. That might be your local servers, or virtual machines running within a public cloud like AWS or Azure.

Docker Datacenter

You set up Datacenter (now marketed as part of Docker Enterprise Edition) by downloading and installing the regular Docker Engine on your local server, along with a second package called the Docker Universal Control Plane (UCP). The UCP provides a browser interface that permits centralized management for all the images, apps, and networks that make up your infrastructure. Security, too, is handled through the interface.

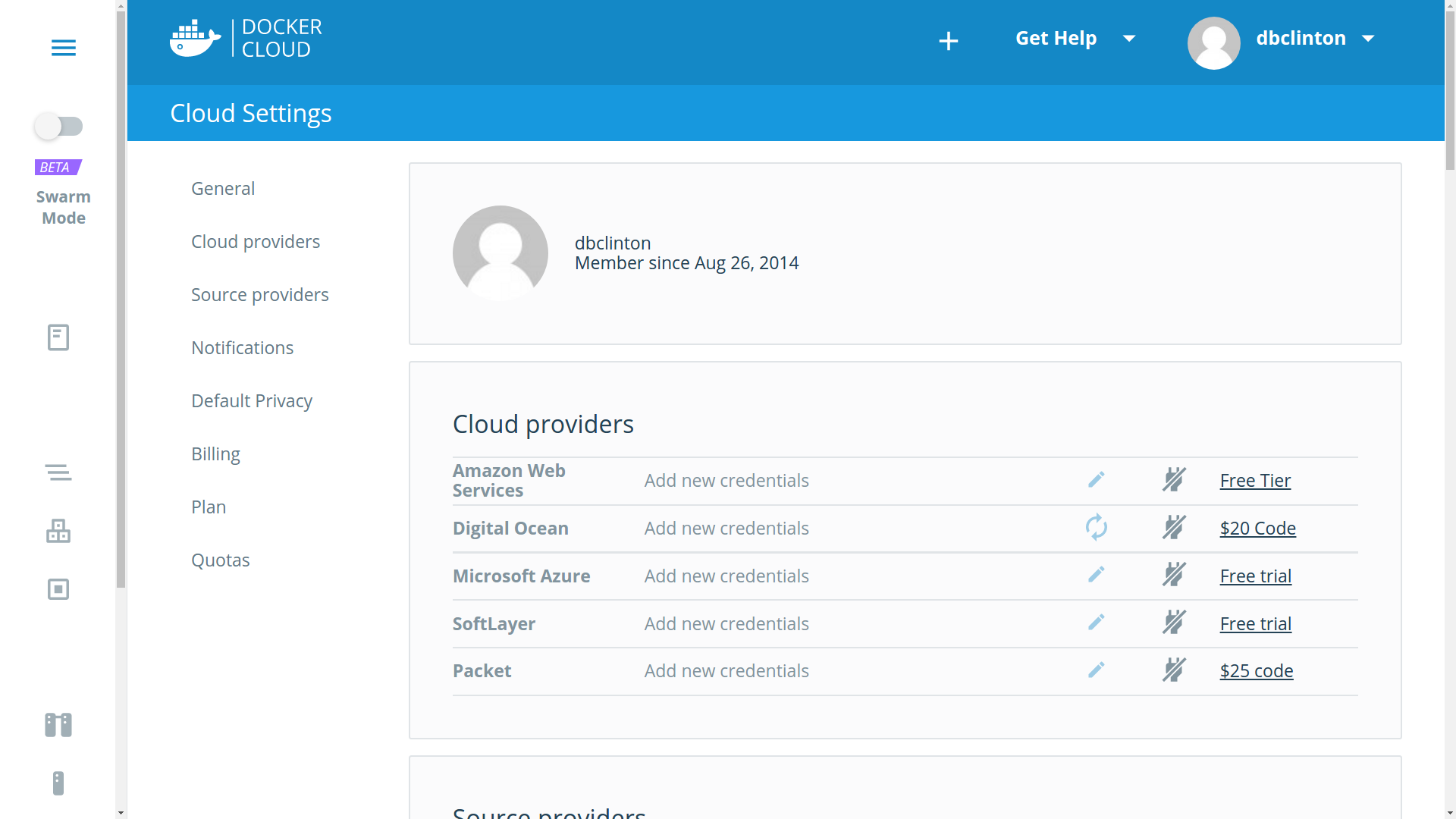

Docker Cloud

Much like Docker Datacenter (which is also an official Docker product), Docker Cloud offers a GUI, browser-based console for managing all aspects of your Docker deployments. This includes administration for your host nodes running in public clouds. The big difference is that, unlike Datacenter, the Docker Cloud administration service is hosted from the cloud.docker.com site: there’s no server software to install on your own equipment.

Docker Cloud – cloud provider settings

It works by entering authentication information for your cloud provider accounts (like AWS) or by installing the Docker Cloud Agent on any Linux or Windows machine running anywhere where there’s network connectivity. Clicking the “Bring your own node” button in the Node Clusters window will display a Linux command to download and install the agent that might look something like this:

curl -Ls https://get.cloud.docker.com/ | sudo -H sh -s 90b501cb04e344bfbf76890a09362c39

Docker Cloud organizes resources into node clusters, which are groups of individual nodes being managed as part of a single service, all dedicated to a unified deployment goal.

I think that part of the reason Docker continues to promote two such similar services (Datacenter and Cloud) goes a couple of years back to when Docker purchased a company called Tutum and renamed their web-based product Docker Cloud. Tutum already had a happy customer base and a fairly successful business model, so there was no reason to shut it down. In any case, both work, so just pick whichever one rings your bell.

AWS EC2 Container Service (ECS)

Besides the ECR image registry, AWS has created its own full infrastructure for both hosting and managing Docker container clusters. ECS works by provisioning a purpose-built EC2 instance with both Docker Engine and an ECS agent installed. Using either the ECS console or the AWS CLI, you can define, launch, and manage containers on that EC2 instance.

aws ecs describe-clusters

To be honest, figuring out how all the many ECS pieces fit together can be a tough task. My “Using Docker on Amazon Web Services” course on Pluralsight devotes some time to explaining how the parts work. Here’s the short version:

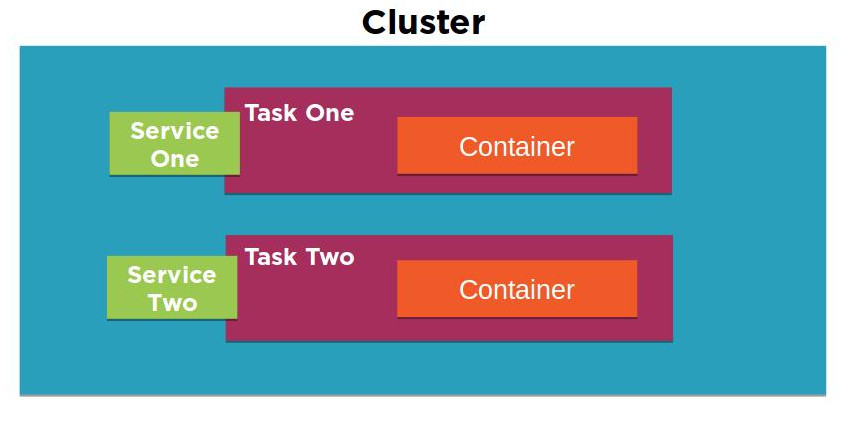

- Tasks: metadata defining an application and its network, storage, and security environment

- Services: software that launches, monitors, and controls your containers

- Containers: definitions for the machines that will run a task

- Clusters: organizing structures for tasks and services

A diagram of the EC2 Container Service architecture
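To make those four pieces a little more concrete, here’s a minimal sketch of a task definition and the commands that might turn it into a running service. The family name, file name, memory value, and `launch_webserver` helper are all my own placeholders, and the helper assumes you already have an ECS cluster with at least one registered instance.

```shell
#!/bin/sh
# Write a minimal ECS task definition: one nginx container listening on port 80.
cat > task-definition.json <<'EOF'
{
  "family": "webserver",
  "containerDefinitions": [
    {
      "name": "nginx",
      "image": "nginx",
      "memory": 128,
      "essential": true,
      "portMappings": [
        { "containerPort": 80, "hostPort": 80 }
      ]
    }
  ]
}
EOF

# Register the task, then create a service that keeps one copy running
# (hypothetical helper; not called here).
launch_webserver() {
    # $1 = name of an existing ECS cluster
    aws ecs register-task-definition --cli-input-json file://task-definition.json &&
    aws ecs create-service --cluster "$1" --service-name webserver \
        --task-definition webserver --desired-count 1
}
```

Running the script only writes the JSON file. Calling `launch_webserver` with your cluster name is what registers the task and hands it to a service.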

AWS Elastic Beanstalk

Elastic Beanstalk effectively sits on top of ECS, allowing you to deploy your application across all the AWS resources normally used by ECS, but with virtually all of the logistics neatly abstracted away. Effectively, all you need in order to launch a fully scalable, complex microservices environment is a declarative JSON-formatted script in a file called Dockerrun.aws.json. You can either upload your script to the GUI or, from the AWS Beanstalk command line interface, run it using:

eb run

And that’s it. No really.

I should mention that Dockerrun.aws.json files come in two flavors: V1 for single container deployments, and V2 for multiple containers. It’s also worth noting that one big advantage of using the CLI over the browser version is how much easier it can make remote SSH logins to the EC2 host and administration tasks.
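For a sense of scale, a bare-bones V1 file might look something like the sketch below. It does nothing more than serve the stock nginx image on port 80; the image and port are my own choices for illustration, not anything lifted from a real deployment.

```shell
#!/bin/sh
# Write a minimal single-container (V1) Dockerrun.aws.json to the current directory.
cat > Dockerrun.aws.json <<'EOF'
{
  "AWSEBDockerrunVersion": "1",
  "Image": {
    "Name": "nginx",
    "Update": "true"
  },
  "Ports": [
    { "ContainerPort": "80" }
  ]
}
EOF
```

A V2 file follows the same idea but describes each container separately, much as an ECS task definition does.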

Here’s something else to think about: the first seventeen chapters of my “Learn Amazon Web Services in a Month of Lunches” book traced, step-by-step, the construction of a highly available, scalable, and secure WordPress site. For chapter 19 – just to quickly illustrate how it works – I created a 20-line Dockerrun.aws.json file that did pretty much exactly the same thing…but in just five minutes.

Now that’s not to say that the book’s first 17 chapters were a waste of time! In fact, without understanding how each separate AWS service works you wouldn’t fully grasp what it was that Beanstalk actually accomplished. And you’ll certainly miss out on all kinds of functionality that can take you way beyond the things that Beanstalk can deliver. But it sure does say something about the power of scripted deployments, doesn’t it?

3. Management tools

Docker Swarm Mode

Although it’s now a part of Docker Engine right out of the box, perhaps because it’s still undergoing steady change, Docker swarm mode somehow has the flavor of a standalone product. The idea is that you can designate one of your servers (known as a node) as a manager:

docker swarm init

…and other servers as workers:

docker swarm join

From there, using “docker service” commands from the manager will create and administer clusters of Docker containers as services, and automatically and efficiently spread the containers among all of your available servers, no matter where they might live. You should try this out for yourself just for the thrill of running a simple “service scale” command and seeing the right number of containers magically and instantly appear across your network.

docker service create -p 80:80 --name webserver nginx

docker service scale webserver=5

I dedicated part of my Pluralsight “Using Docker with AWS Elastic Beanstalk” course to demonstrating Docker Swarm in action. Take a look if you’re interested.
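One detail worth spelling out: in practice the join command needs a token and the manager’s address. Here’s a rough sketch of how you might generate it. The helper names, the sample token, and the IP address are placeholders of my own; 2377 is the port swarm managers listen on by default.

```shell
#!/bin/sh
# Assemble the command a worker runs to join a swarm.
join_command() {
    # $1 = worker join token, $2 = manager address
    printf 'docker swarm join --token %s %s:2377\n' "$1" "$2"
}

# On the manager: print the exact command to paste on each worker
# (hypothetical helper; not called here).
print_worker_join() {
    # $1 = address at which workers can reach this manager
    token=$(docker swarm join-token -q worker) || return 1
    join_command "$token" "$1"
}

# Placeholder token and address, for illustration only
join_command SWMTKN-example 192.168.1.10
```

Running `print_worker_join` on a real manager swaps the placeholder for a live token, which you then paste into a shell on each machine you want in the swarm.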

Kubernetes

Like Swarm, Google’s Kubernetes is also very good at efficiently managing large container clusters. And to say that Kubernetes is popular is like saying rain is wet. Duh.

Kubernetes organizes resources into pods, which themselves are made up of interconnected containers running individual microservices. You should think of a pod as being entirely disposable, its function instantly replaceable by others awaiting their chance to enter this world. In fact, pods are created and destroyed according to the needs defined on the Master node by things like schedulers and replication controllers, all of which can, in turn, be managed by the kubectl program. Pods, and their containers, run on servers known as worker nodes running their own instances of Docker Engine.
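To give the disposable-pod idea some shape, here’s a minimal sketch of a manifest asking Kubernetes to keep three nginx pods alive. Note that I’m assuming a Deployment here, which is the object current Kubernetes releases generally use in place of a bare replication controller, and that the names and file name are placeholders.

```shell
#!/bin/sh
# Write a manifest describing three interchangeable nginx pods.
cat > webserver-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webserver
spec:
  replicas: 3
  selector:
    matchLabels:
      app: webserver
  template:
    metadata:
      labels:
        app: webserver
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
EOF
```

With a cluster available, `kubectl apply -f webserver-deployment.yaml` would submit it and `kubectl get pods` would show the results. Delete one of the pods by hand and a replacement should turn up shortly afterwards.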

I don’t know about you, but I find it both confusing and annoying that every single IT platform chooses to refer to the constituent elements by different – but often only slightly different – names. There oughta be a law.

Deployment Automation tools

I can’t walk away from a review article like this without at least mentioning how you can use just about any of the popular deployment orchestration tools like Ansible, Jenkins, and Puppet to automate your Docker environments. Diving into the fine details would take me far beyond my original plan for this article, so just pick your favorite tool and read up on its documentation.